In the ever-evolving landscape of technology, privacy and convenience often pull in opposite directions. Meta has recently introduced a seemingly innovative feature that lets users call on its AI directly within their private messaging apps, including Facebook, Instagram, Messenger, and WhatsApp. Having an AI assist in chats is an appealing idea, but it raises significant concerns about user privacy and data protection.

The Arrival of AI in Private Chats

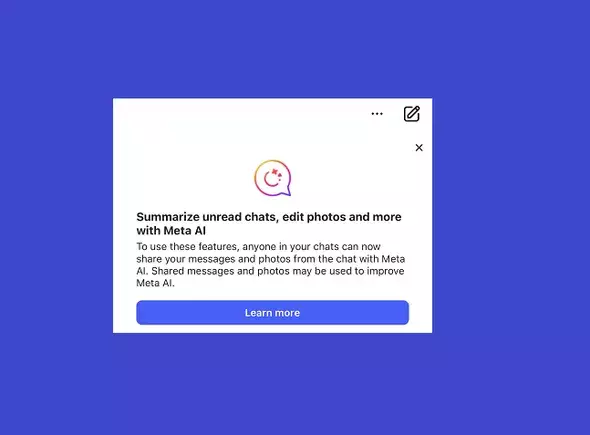

Meta has begun advertising a feature that lets users consult its AI simply by mentioning “@MetaAI” in a chat. The integration is meant to improve the user experience by supplying timely information and assistance within a conversation. However, this apparently helpful addition comes with a catch: by engaging Meta AI in a chat, users consent to the possibility that their messages will be processed and stored for AI training purposes.

The feature has sparked a flurry of discussion among users, particularly about what it means for their private communications. The risk that sensitive information, from passwords to personal anecdotes, could inadvertently become material for AI processing is a legitimate concern. Meta has tried to address these anxieties by warning users about the kinds of information they may want to withhold, a gesture that acknowledges at least some responsibility for preventing the unintended sharing of personal data.

A Flawed Benefit

Despite the appeal of having an AI assistant on hand to answer questions, the feature's practical value is questionable. Many users may find it uncomfortable to weigh the potential consequences every time they type @MetaAI in a chat. Since users can instead talk to the AI in a dedicated chat window, the benefits of building AI into private conversations may not justify the risk of exposing chat data to Meta’s algorithms.

Meta's alert also highlights a critical point: what counts as sensitive information is inherently ambiguous. What one person considers trivial, another may view as private. Users are therefore left to tread carefully, weighing the usefulness of AI responses against the threat of privacy intrusions.

User consent adds a further layer of complexity. When signing up for Meta’s services, users typically click through lengthy terms and conditions full of legal jargon, often without understanding how their data may be used. Meta’s pop-up notification reminds users that they have already consented to such practices, albeit without full awareness of the implications. This raises ethical questions about the transparency of data practices and about how far companies like Meta should be held accountable for user privacy.

For many users, the lack of a straightforward opt-out mechanism complicates matters further. To avoid having their messages used for AI training, users must refrain from asking @MetaAI questions, delete messages, or stop using Meta’s apps altogether. None of these options is ideal, and together they illustrate the problems that arise when technological advances prioritize engagement over user trust.

So what can users do to protect their privacy in light of these developments? First, awareness is key. Users should stay mindful of what information they share in chats, particularly when AI features are involved. Taking a proactive approach, such as using a separate channel for interacting with AI, can reduce the risk.

Ultimately, the responsibility lies not only with Meta to be upfront about its data usage policies but also with users to stay informed and use their judgment when managing their digital footprints. The tension between convenience and privacy will remain a hot topic of debate as technology continues to change rapidly.

Meta’s integration of AI tools into its messaging platforms represents a leap forward in using technology to enhance the user experience, but it also poses a substantial risk to privacy. Users are encouraged to voice their concerns, demand clearer communication about data policies, and remain vigilant about the potential consequences of their digital interactions. The balance between innovation and privacy is an ongoing negotiation, and empowering individuals to make informed choices remains paramount.