Artificial intelligence has revolutionized content creation, offering unprecedented freedom and versatility. That power is a double-edged sword, however, especially when safeguards are superficial or entirely absent. The recent proliferation of AI-driven image and video generators, such as xAI's Grok Imagine, exposes glaring gaps in the industry's promises of responsible AI use. Companies claim to implement safeguards against harmful or inappropriate content, but the reality often tells a different story: creators and malicious actors can easily bypass restrictions, fueling concerns about ethical accountability and potential misuse.

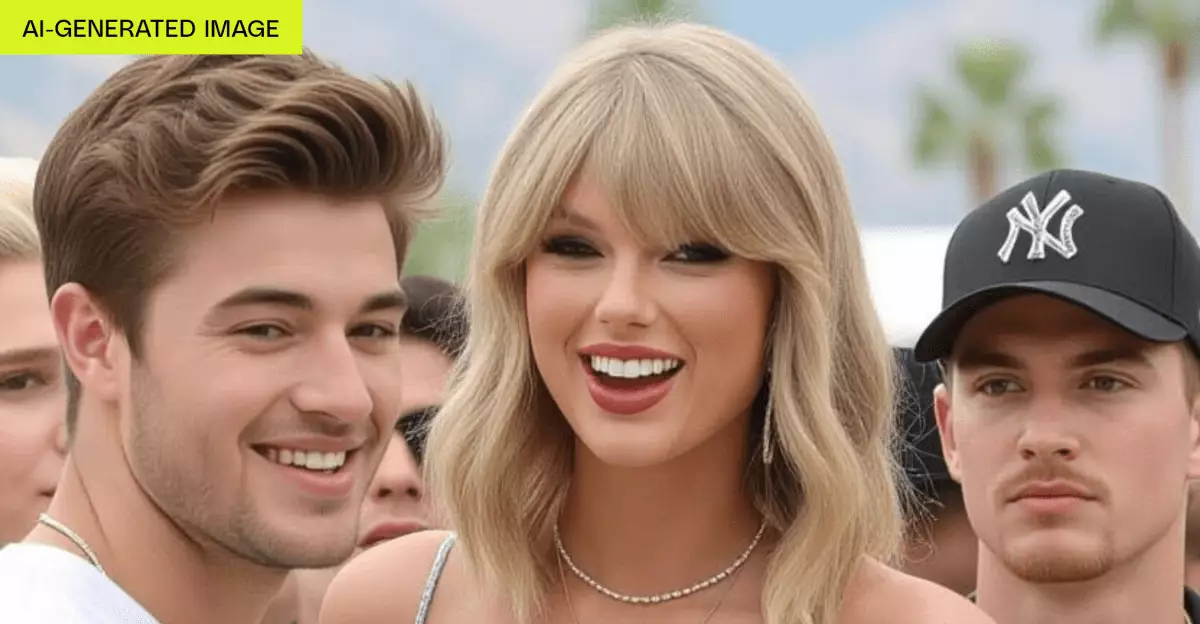

The crux of the problem lies in the superficial implementation of safety measures. When an AI tool lets users produce suggestive or NSFW content with minimal oversight, it not only undermines societal standards but also sets a dangerous precedent. That Grok Imagine readily generates uncensored nudity, including suggestive images of celebrities such as Taylor Swift, behind only cursory age verification underlines a troubling gap between advertised and actual safeguards. Such lax controls invite a host of problems, from deepfakes used for harassment or misinformation to the exploitation of minors and other vulnerable people. This is not merely a technical oversight but a profound ethical lapse that jeopardizes public trust in AI.

Regulatory Gaps and the Illusion of Control

Current regulations, such as the U.S. Take It Down Act, attempt to set boundaries for AI-generated content, but enforcement remains inconsistent. Many companies advertise policies banning explicit depictions of real individuals or the creation of pornographic material. Yet these statements ring hollow when a product's design favors user discretion over genuine safety. Grok Imagine's failure to enforce its own acceptable use policy, exemplified by its loophole-ridden age verification, shows that compliance can be superficial at best.

The ease with which users can bypass age checks and generate adult content reveals a critical flaw: the absence of robust identity verification. Basic technical barriers are insufficient when the underlying system remains inherently permissive. As a result, anyone with a smartphone and a subscription can access potentially damaging content without meaningful oversight. This ease of access exposes the weakness of self-regulatory models and raises urgent questions about the need for stricter legislation and accountability.

The Ethical Crisis Beneath Cutting-Edge Innovation

Technological innovation should come with a social contract: an implicit understanding that safety and responsibility come first. Sadly, the current landscape suggests that companies prioritize profit and novelty over ethics. The allure of offering “spicy” modes or unrestricted features pushes them to ignore the harm their products can cause. Letting AI generate deepfake celebrity images or suggestive videos without genuine safeguards risks normalizing malicious uses, from revenge porn to misinformation campaigns.

There is also an alarming inconsistency in how these tools handle sensitive content. Explicit nudity may sometimes be blocked or blurred out, yet suggestive images and implied sexual content are still generated with ease. This ambiguity normalizes boundary-pushing and blurs ethical lines. The consequence is corrosive: if anyone can easily craft and disseminate manipulated media that looks authentic, the shared foundation of truth and trust erodes further.

Responsibilities of Developers and Regulators

It is increasingly clear that responsibility for mitigating these risks cannot rest on technical safeguards or voluntary policies alone. Developers must build responsible AI principles into their systems from the ground up, employing not superficial filters but comprehensive identity and content verification. Equally important are proactive monitoring and swift removal of harmful content, even if that means limiting certain features for the sake of safety.

Regulators, too, have a critical role. Laws and guidelines must evolve to address the realities of AI abuse, establishing clear penalties for violations and mandating transparency about how these systems operate. Self-policing has proven ineffective; systemic oversight and enforceable standards are now imperative to prevent the kind of unchecked misuse seen on platforms like Grok Imagine.

Discussions of AI ethics often emphasize creator responsibility, but technology's capacity for harm far outstrips what individual accountability can contain. Companies must recognize that their tools are powerful multipliers of human intent, both good and bad, and build defenses robust enough to match. Without them, promises of safe, responsible AI remain empty. The future of AI-generated content hinges not only on technological innovation but also on a collective commitment to ethical stewardship.