The integration of technology into education has long been portrayed as an inevitable ascent towards a brighter, more inclusive future. From Apple’s pioneering initiatives in the 1980s to the widespread deployment of digital devices today, the narrative has largely centered on progress and opportunity. Yet beneath the glossy facade lies an unsettling question: Are these technological advancements genuinely enhancing learning, or are they subtly undermining the fundamental skills that form the backbone of education?

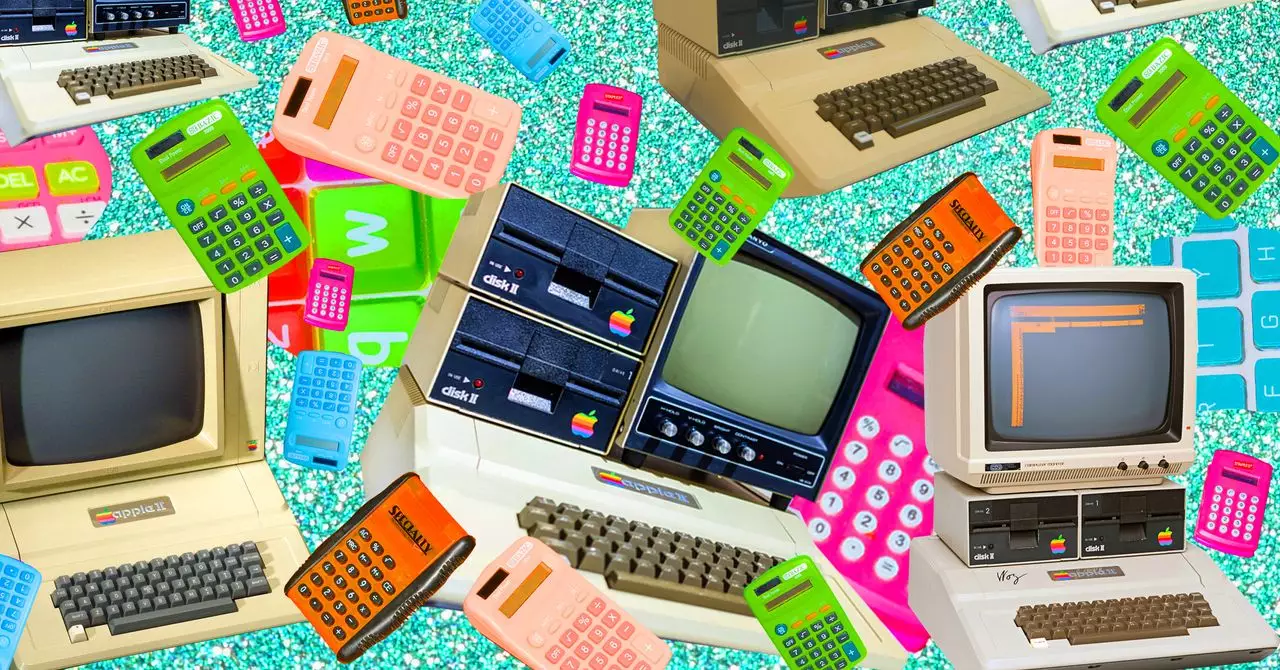

Apple’s early role in transforming classrooms exemplifies this complex dynamic. By donating thousands of Apple IIe computers under programs like Kids Can’t Wait, the company positioned itself as a catalyst for change. The initial vision was clear—equip students with digital tools and prepare them for a tech-driven world. However, the rapid increase in device-to-student ratios, from a mere 1:92 in 1984 to nearly 1:4 by 2008, indicates a relentless push towards device ubiquity. While access to technology is essential, one must question whether quantity equates to quality. Are students truly engaging in meaningful learning, or are screens replacing critical thinking and interpersonal skills?

This boom in digital tools has not gone unchallenged. Some educators and scholars, such as A. Daniel Peck, warn that overreliance on computers could dilute essential skills. Their concern rests on the conviction that enthusiasm for integrating technology has sometimes overshadowed pedagogical fundamentals. The “computer religion,” as Peck calls it, describes an unquestioning faith in technology’s efficacy, an ideology that can blind us to its potential pitfalls. Is the drive to adopt every new device a case of technology for technology’s sake rather than a response to educational needs?

The Costly Mirage of a Technological Panacea

Beyond pedagogical debates, the financial implications of educational technology are staggering. Interactive whiteboards, initially introduced to classrooms in the early 2000s, symbolize this trend: attractive, high-tech, but fraught with questions about value and priorities. By 2009, nearly one-third of K-12 classrooms featured these devices, with sales ballooning worldwide. Nevertheless, the high costs—ranging from $700 to over $4,500 per unit—pose a critical dilemma. Is this expenditure justified when compared to other potential investments, such as personalized learning tools or teacher training?

Economic skepticism also extends to infrastructure and ongoing maintenance, costs that are easy to overlook amid the seemingly endless stream of new devices schools feel compelled to acquire. Critics argue that these resources could be redirected more effectively: towards individualized, project-based learning experiences or towards addressing socioeconomic disparities. Moreover, whiteboards and similar equipment tend to emphasize teacher-led instruction, potentially stifling student agency and collaboration. Are these gadgets serving as gateways to active inquiry, or are they merely symbols of technological excess?

Meanwhile, the internet’s widespread adoption has been heralded as a transformative milestone—yet it is equally riddled with concerns. During the 1990s and early 2000s, government initiatives like the E-Rate program sought to subsidize internet access, dramatically improving connectivity in schools. However, accessibility does not automatically guarantee effective learning experiences. The digital environment presents risks—distractions, misinformation, and exposure to inappropriate content—that many educators and parents overlook in the rush to modernize.

The narrative here is nuanced: while internet access has opened unprecedented avenues for resource sharing and global connectivity, it also introduces vulnerabilities that can hinder genuine, focused learning. The challenge lies in managing this double-edged sword, harnessing its potential without succumbing to its pitfalls.

Questioning the Narrative: Are We Truly Advancing Education?

Despite technological advances and massive investments, the core purpose of education remains under threat. The early optimism that computers, whiteboards, and the internet would transform classrooms into hubs of creativity and critical thought now faces an uneasy countercurrent: are we sacrificing fundamental skills for digital bells and whistles? Critics argue that the rush to digitize education risks overshadowing essential skills such as reading, writing, and numeracy.

Furthermore, adoption trends suggest a pattern of technological zealotry driven more by industry interests and political agendas than by educational outcomes. Lobbying, subsidies, and enthusiastic endorsements from policymakers often mask the deeper concerns of educators who see these tools as distractions rather than catalysts for learning. The question is not just technology’s availability but its pedagogical integration: are these tools being used to promote genuine inquiry, or to prop up outdated, teacher-centered models?

There’s also an emotional component to this debate. The excitement surrounding new devices can create a “bandwagon effect,” pushing schools to adopt the latest technology without thoroughly evaluating its impact. Such rapid, sometimes impulsive adoption risks leaving behind students from underprivileged backgrounds who may lack the same access or support systems, thereby widening existing educational gaps.

The uncritical embrace of technology, coupled with its exorbitant costs, compels us to reflect: Are we building an educational future based on meaningful human interaction and foundational skills, or are we chasing a shiny mirage that may ultimately dilute the integrity of learning? It’s an urgent, ongoing conversation that demands deliberate, thoughtful consideration—one where critical voices should not be drowned out by the siren song of innovation.