The artificial intelligence landscape is rapidly evolving, particularly with the contributions from innovative startups. One such standout is DeepSeek, a Chinese AI startup making waves in the industry with its impressive open-source developments. Recently, the company unveiled its latest creation, DeepSeek-V3, a state-of-the-art ultra-large AI model that boasts a staggering 671 billion parameters. This article explores the transformative impact of DeepSeek-V3, its remarkable features, and what it means for the future of AI models.

DeepSeek-V3 represents a significant leap in the capabilities of open-source AI models. Its mixture-of-experts architecture sets it apart from many contemporaries: rather than running every parameter on every input, it activates only a subset of its parameters for each token, resulting in enhanced efficiency and accuracy. This selective activation lets the model manage its very large parameter count effectively, balancing performance against resource use in today’s AI applications.

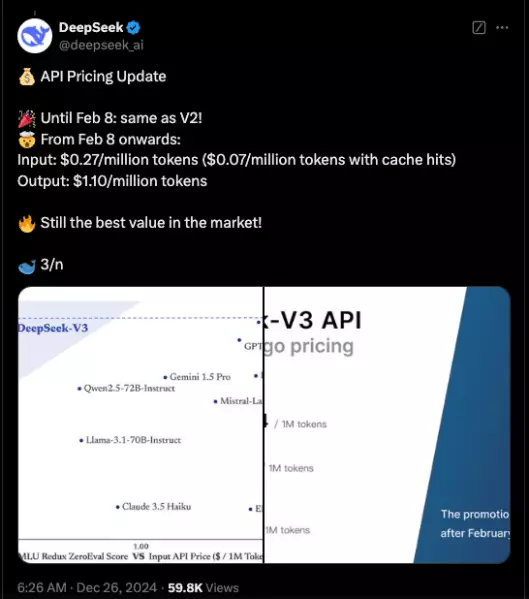

The results are impressive. Early benchmarks indicate that DeepSeek-V3 not only outpaces existing open-source models, such as Meta’s Llama 3.1-405B, but also closely approaches the performance of proprietary models from heavyweight competitors like Anthropic and OpenAI. Such advancements hint at a promising trend where open-source systems might soon rival and even outperform their closed-source counterparts.

At the heart of DeepSeek-V3’s architecture lies the multi-head latent attention (MLA) mechanism paired with DeepSeekMoE. These two components underpin both training and inference, with a notable focus on routing work to specialized “experts.” The model activates 37 billion of its 671 billion parameters for each token, showcasing a blend of specialization and shared expertise.
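To make the idea of per-token expert activation concrete, here is a minimal sketch in Python. The two parameter counts come from the article; everything else (the function names, the eight-expert layer, the random scores, and the choice of two experts per token) is a hypothetical toy setup and not DeepSeek’s actual router.

```python
import random

TOTAL_PARAMS_B = 671   # total parameters in billions, from the article
ACTIVE_PARAMS_B = 37   # parameters activated per token in billions, from the article


def active_fraction(active: float = ACTIVE_PARAMS_B, total: float = TOTAL_PARAMS_B) -> float:
    """Share of the model's parameters used for a single token."""
    return active / total


def route_token(scores: list[float], top_k: int) -> dict[int, float]:
    """Pick the top_k highest-scoring experts and normalize their scores.

    `scores` holds one positive affinity per expert (toy values here).
    Returns a mapping of expert index -> gate weight; the weights sum to 1.
    """
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    total = sum(scores[i] for i in chosen)
    return {i: scores[i] / total for i in chosen}


if __name__ == "__main__":
    # 37 / 671 is about 5.5% of the parameters per token.
    print(f"active share per token: {active_fraction():.1%}")

    random.seed(0)
    toy_scores = [random.random() for _ in range(8)]  # hypothetical 8-expert layer
    print(route_token(toy_scores, top_k=2))
```

Only the chosen experts would run for that token, which is why the per-token compute tracks the 37 billion active parameters rather than the full 671 billion.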

DeepSeek-V3 also introduces two innovations that augment its capabilities. First, its auxiliary-loss-free load-balancing strategy continuously monitors and adjusts how work is distributed across the model’s experts. This dynamic approach keeps the workload balanced without degrading performance, an essential feature for high-demand applications. Second, multi-token prediction (MTP) allows the model to predict multiple future tokens simultaneously, improving efficiency and enabling generation speeds of up to 60 tokens per second.
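The load-balancing idea can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not DeepSeek’s implementation: it assumes each expert carries a bias that is added to its routing score only when experts are chosen, and that the bias is nudged down when the expert is overloaded and up when it is underloaded. The function names, the step size, the skewed toy scores, and the batch sizes are all hypothetical.

```python
import random


def select_experts(scores, bias, top_k):
    """Rank experts by score + bias and keep the top_k (bias affects selection only)."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    return ranked[:top_k]


def update_bias(bias, load, step=0.01):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - step)
        elif n < mean_load:
            new_bias.append(b + step)
        else:
            new_bias.append(b)
    return new_bias


def simulate(batches=300, tokens_per_batch=200, top_k=1, step=0.01, seed=0):
    """Route toy tokens through 4 experts whose raw scores are deliberately skewed."""
    rng = random.Random(seed)
    skew = [0.3, 0.1, 0.0, 0.0]  # experts 0 and 1 start out favored
    bias = [0.0] * len(skew)
    load = [0] * len(skew)
    for _ in range(batches):
        load = [0] * len(skew)
        for _ in range(tokens_per_batch):
            scores = [rng.random() + s for s in skew]
            for expert in select_experts(scores, bias, top_k):
                load[expert] += 1
        bias = update_bias(bias, load, step)
    return bias, load  # final biases and the last batch's per-expert load


if __name__ == "__main__":
    final_bias, last_load = simulate()
    print("bias:", [round(b, 2) for b in final_bias])
    print("load in last batch:", last_load)
```

In this toy setup the favored experts end up with lower biases than the others, which pulls the per-expert load toward an even split without adding any extra term to a training loss.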

A distinct advantage of DeepSeek’s approach is its cost-effective training process. The company asserts that the entire training of DeepSeek-V3 took approximately 2,788,000 GPU hours on H800 hardware, amounting to roughly $5.57 million. This figure is a fraction of the costs typically associated with training such advanced models, especially when compared to estimates suggesting expenditures of over $500 million for models like Llama 3.1.
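The arithmetic behind these figures is easy to check. The short sketch below uses only the numbers quoted above; the constant and function names are illustrative, and the $500 million comparison figure is the estimate cited in the text, not a measured cost.

```python
GPU_HOURS = 2_788_000             # H800 GPU hours, as stated by the company
REPORTED_COST_USD = 5_570_000     # reported training cost
COMPARISON_COST_USD = 500_000_000  # estimate cited for models like Llama 3.1


def implied_hourly_rate(cost_usd: float, gpu_hours: float) -> float:
    """Cost per GPU hour implied by a total cost and a GPU-hour count."""
    return cost_usd / gpu_hours


def cost_ratio(cost_usd: float, reference_usd: float) -> float:
    """One cost expressed as a fraction of a reference cost."""
    return cost_usd / reference_usd


rate = implied_hourly_rate(REPORTED_COST_USD, GPU_HOURS)
share = cost_ratio(REPORTED_COST_USD, COMPARISON_COST_USD)

print(f"implied rate: ${rate:.2f} per GPU hour")       # implied rate: $2.00 per GPU hour
print(f"share of comparison estimate: {share:.1%}")    # share of comparison estimate: 1.1%
```

In other words, the reported cost works out to about $2 per GPU hour, and to roughly one percent of the comparison estimate.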

This cost profile not only underscores DeepSeek’s commitment to innovation but could also democratize access to advanced AI technologies. By significantly lowering the barrier to entry, DeepSeek is positioned to foster a more competitive environment in the AI space, ultimately benefiting a wide array of enterprises.

In tandem with its innovative architecture, DeepSeek has conducted extensive benchmarks and performance evaluations against various models. The results confirm DeepSeek-V3’s competitive edge, particularly on math-centric benchmarks, where it scored 90.2 on the Math-500 test. In most other evaluations, Anthropic’s Claude 3.5 Sonnet was the only model to surpass it.

However, even while excelling in many areas, DeepSeek-V3 showed limitations on specific tasks. On the SimpleQA and FRAMES benchmarks, it lagged behind OpenAI’s GPT-4o, pointing to the nuanced strengths and weaknesses that various models present. Such findings emphasize the importance of continued innovation and refinement in AI models, particularly as demand for robust and effective solutions grows.

As DeepSeek ventures into the evolving realm of artificial general intelligence (AGI), the creation of DeepSeek-V3 heralds a new era for open-source technologies. By closing the gap with closed-source models, DeepSeek not only enhances competitiveness but also provides enterprises with diverse options for integrating AI into their operations. Such diversity can mitigate risks associated with reliance on a single dominant player in the AI industry.

Moreover, the availability of DeepSeek-V3’s code on GitHub under an MIT license, with the model itself provided under the company’s model license, fosters a collaborative environment, encouraging open innovation and community-driven development. With testing platforms like DeepSeek Chat and an accessible API, businesses have substantial avenues to leverage this cutting-edge technology.

DeepSeek-V3 signifies a remarkable advancement in the open-source AI domain. Its strategic innovations, architectural design, and commitment to cost efficiency stand as a testament to the bright future of AI, where accessibility and competitive performance converge. As the industry continues to evolve, the implications of such developments will undoubtedly extend far beyond current applications, reshaping the technological landscape as we know it.