In an era where digital interaction often shapes young lives, Meta’s recent strategies to safeguard teen users reflect both a recognition of ongoing risks and a bold step toward greater responsibility. Its latest suite of protective features aims to cultivate a safer environment on Instagram, not merely by adding superficial barriers, but by giving young users knowledge, control, and tools for swift action. Such initiatives demonstrate an understanding that protection is an ongoing process, not a one-time fix, and the tech giant’s efforts signal a shift toward prioritizing mental and emotional well-being over mere engagement metrics.

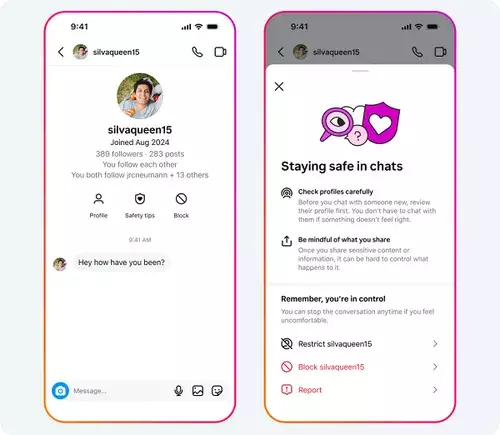

One of the most notable innovations is the introduction of in-chat “Safety Tips,” which serve as immediate, accessible guides. These prompts do more than flag potential dangers: they act as educational interventions at the moment of interaction. By building these tips into everyday conversations, Meta embeds safety awareness in natural social exchanges, making it less likely that scams or harmful behavior will go unnoticed by teens. The accompanying block and report links let users act swiftly against suspicious accounts without the cumbersome process previously involved. The simplicity of these tools reflects a crucial understanding: safety features are most effective when seamlessly integrated into everyday use.

Meta’s step to display contextual information, such as account creation dates, is another commendable move. Such transparency helps teens and their guardians better judge the credibility of the accounts they interact with, reducing the likelihood of falling prey to malicious actors. Furthermore, by combining the block and report functions into a single action within direct messages (DMs), Meta streamlines the safety process, reducing friction and encouraging users to act. These improvements recognize that digital safety is as much a matter of convenience as of policy.

Addressing Predatory Behaviors and Manipulation

The persistent challenge, however, lies in tackling the darker aspects of online interaction, particularly predatory behavior aimed at adult-managed teen accounts. The numbers shared by Meta, with hundreds of thousands of accounts removed for sexualized comments or requests directed at minors, underscore a sobering reality: online predators are actively exploiting vulnerabilities in social media platforms. While these figures demonstrate Meta’s enforcement efforts, they also expose the sheer scale of the problem. Protecting minors from such threats should be a fundamental priority, and Meta’s removal of suspicious accounts is a necessary, though insufficient, response.

Moreover, these measures highlight an ongoing dilemma: platform responsibility versus individual behavior. While technical bans and content moderation are essential, they cannot fully eliminate predators or harmful engagement. Deeper cultural and educational shifts are needed, and platforms should reinforce the understanding that online safety is a shared responsibility. Meta’s inclusion of features like nudity protection, restricted messaging for minors, and location data safeguards exemplifies a layered approach, but the battle against manipulative behaviors demands continuous evolution.

Balancing Innovation and Responsibility Amid Policy Changes

Beyond protective features, Meta’s support for raising the legal age of social media access across the European Union signals a recognition that the policy environment matters just as much as technological safeguards. Advocating for a standardized “Digital Majority Age,” potentially raising the threshold to 16 or even 18, reflects a strategic alignment with public health and child protection interests. While such measures could curb early exposure to online harms, they also raise questions about digital inclusion. Limiting access might protect young users, but it can also cut adolescents off from their peers during critical developmental stages.

Meta’s apparent backing of these policies suggests a nuanced approach—embracing safeguards while navigating the economic and social implications of restricting youth access. While some might see this as a corporate alignment with regulatory trends, it also signals an acknowledgment that digital platforms must evolve in tandem with societal expectations and legal standards. As social media increasingly becomes a fundamental part of young people’s lives, robust protective measures and clear age restrictions are a moral imperative, not just a regulatory box to check.

Meta’s recent initiatives highlight a pivotal shift: a recognition that the digital environment for teens must be fortified not just through reactive policing but through proactive education, streamlined controls, and strategic policy support. While not perfect, these moves acknowledge the moral responsibility platforms have to nurture safe online communities. The challenge of balancing innovation with protection and responsibility remains, yet the direction clearly points toward a safer, more aware digital future.