In a field where precision, speed, and versatility define competitive advantage, Google’s Gemini Embedding model marks a significant step forward. The model, gemini-embedding-001, reached a leading position on the Massive Text Embedding Benchmark (MTEB) immediately after release, which positions it as a potential foundation for future AI applications rather than just another market entrant. Its arrival forces enterprises to examine a strategic choice: adopt Google’s proprietary system, or explore the open-source alternatives that are now gaining momentum.

The model’s general-purpose positioning is deliberate. Designed for broad adaptability, Gemini promises to streamline complex AI workflows across sectors, from legal and finance to engineering and beyond. Its benchmark performance is compelling, but real-world enterprise decisions also depend on control, cost, and data sovereignty. The question isn’t merely which model ranks highest on benchmarks but which aligns best with a company’s strategic ambitions and operational constraints.

Embedding Technology: The Secret to Smarter Intelligence

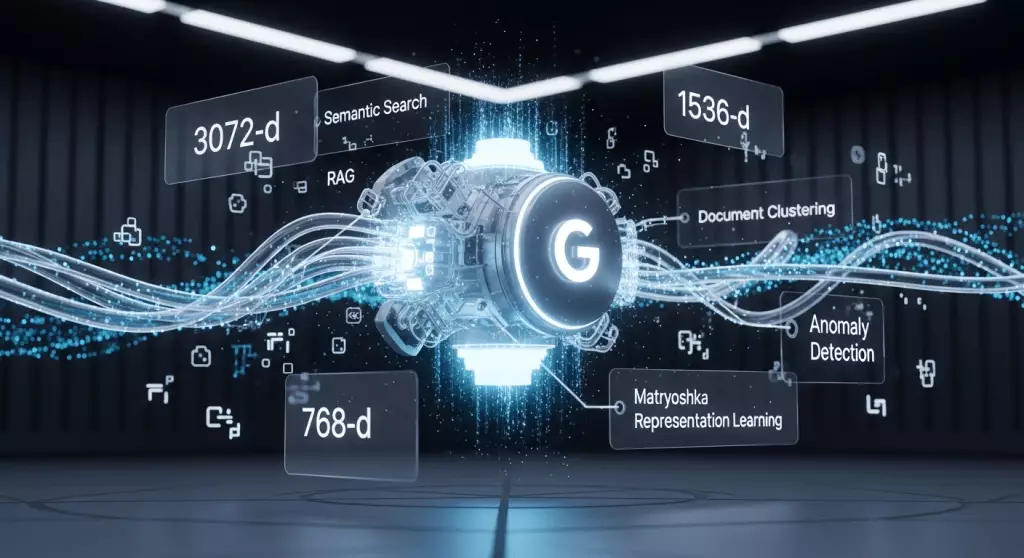

At the heart of Google’s Gemini is embedding technology, which turns unstructured data into a form that software can compare and search. An embedding is a numerical representation of a piece of text, an image, or other media: a vector that acts as a coordinate on a semantic “map.” When two pieces of data have similar meanings, their vectors sit closer together in the multidimensional space. This simple yet powerful concept enables semantic search, context-aware document retrieval, and multimodal applications that integrate text, images, and audio.
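To make the idea of “closeness” concrete, here is a minimal sketch of ranking texts by cosine similarity, one common way to compare embedding vectors. The four-dimensional vectors and the `rank_by_similarity` helper are invented for illustration; real embeddings come from a model and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings, made up for this example (not model output).
TOY_EMBEDDINGS = {
    "contract termination clause": [0.9, 0.1, 0.0, 0.2],
    "ending an agreement early": [0.8, 0.2, 0.1, 0.3],
    "quarterly revenue forecast": [0.1, 0.9, 0.3, 0.0],
}


def rank_by_similarity(query_vector, embeddings):
    """Return (text, score) pairs sorted from most to least similar."""
    scored = [
        (text, cosine_similarity(query_vector, vector))
        for text, vector in embeddings.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    query = TOY_EMBEDDINGS["contract termination clause"]
    for text, score in rank_by_similarity(query, TOY_EMBEDDINGS):
        print(f"{score:.3f}  {text}")
```

With these toy values, the two legal phrases score close to each other while the finance phrase scores far lower, which is the behavior a semantic search system relies on.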

A notable feature of Gemini is its use of Matryoshka Representation Learning (MRL), a training technique that concentrates the most important information in the leading dimensions of each vector. The model produces detailed 3072-dimensional embeddings by default, and these can be truncated to 1536 or 768 dimensions while remaining usable. This flexibility lets businesses balance precision against computational load and storage cost according to their specific needs. The design reflects an understanding of enterprise requirements and hints at a future where AI models are not monolithic but customizable tools tailored to specific operational contexts.
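The trade-off can be sketched in a few lines. The example below assumes the usual way of consuming MRL-style embeddings, which is to keep a prefix of the vector and rescale it to unit length; a hosted API may instead expose the output size as a request parameter. The function names and the stand-in vector are hypothetical, and the storage estimate assumes 4-byte float32 values with no index overhead.

```python
import math


def truncate_embedding(vector, dims):
    """Keep the first `dims` components and rescale to unit length."""
    if not 0 < dims <= len(vector):
        raise ValueError("dims must be between 1 and len(vector)")
    prefix = vector[:dims]
    norm = math.sqrt(sum(x * x for x in prefix))
    if norm == 0:
        raise ValueError("cannot normalize an all-zero prefix")
    return [x / norm for x in prefix]


def storage_bytes(num_vectors, dims, bytes_per_value=4):
    """Raw storage for the vectors alone (float32 by default)."""
    return num_vectors * dims * bytes_per_value


if __name__ == "__main__":
    # Stand-in for a 3072-dimensional model output (assumption: real
    # values would come from the embedding model, not this formula).
    full = [0.01 * ((i % 7) - 3) for i in range(3072)]

    for dims in (3072, 1536, 768):
        reduced = truncate_embedding(full, dims)
        gigabytes = storage_bytes(1_000_000, dims) / 1e9
        print(f"{len(reduced)} dims: {gigabytes:.2f} GB per million vectors")
```

Under these assumptions, one million vectors need roughly 12.3 GB at 3072 dimensions and roughly 3.1 GB at 768, a fourfold reduction that also shrinks similarity-computation cost proportionally.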

Strategic Choices: Proprietary Dominance vs. Open-Source Innovation

Google’s Gemini is positioned as an “out-of-the-box” solution capable of functioning across diverse domains without the need for extensive fine-tuning. Its extensive language support—over 100 languages at a competitive price point—further bolsters its appeal as a global, scalable solution. However, dominance in benchmarks does not guarantee supremacy in deployment environments. The landscape teems with formidable contenders such as OpenAI and Cohere, each offering niche advantages.

OpenAI’s embeddings are well entrenched, largely because its models are already so widely integrated. Cohere, meanwhile, focuses on robustness in handling messy, real-world data, an essential feature for large enterprises managing vast and varied document repositories. Cohere’s models can also be deployed on private clouds or on-premises infrastructure, which appeals to industries with strict regulatory requirements, such as healthcare and finance.

Yet, amidst these proprietary offerings, open-source models are increasingly challenging the status quo. Alibaba’s Qwen3-Embedding, distributed under an open license, provides an openly available alternative that doesn’t sacrifice performance. For organizations that prioritize data sovereignty, customization, and cost-efficiency, open-source models like Qwen3-Embedding or Qodo’s code-centric Qodo-Embed-1-1.5B are compelling options, especially since they can be integrated directly into existing infrastructure without vendor lock-in.

Implications for the Future: Control, Customization, and Ethical Considerations

The evolution from closed, API-driven models to open-source alternatives marks a pivotal shift in AI strategy. Proprietary models like Gemini offer unmatched convenience, seamless integration, and top-tier benchmark performance—particularly advantageous for organizations already embedded within Google Cloud ecosystems. Nonetheless, this convenience comes at the expense of control; reliance on API access limits customization, data handling transparency, and sovereignty.

Open-source embeddings let enterprises adapt models precisely to their own data, including domain-specific terminology or specialized multimodal representations. This shift also raises questions of ethical AI, privacy, and control: organizations increasingly demand the ability to audit, modify, and secure their AI assets, especially in regulated industries. Open models provide a platform for that kind of disciplined, responsible AI development, something established proprietary services are often slow to address because of their commercial interests.

Furthermore, the competitive landscape underscores an underlying truth: no single model will dominate forever. As specialization grows—such as Mistral’s tailored code retrieval or domain-specific embeddings—the future of semantic understanding appears increasingly pluralistic. Enterprises must weigh their current needs against long-term strategic agility, balancing the allure of cutting-edge, all-in-one solutions with the empowerment of open, customizable frameworks.

Google’s Gemini Embedding heralds a new era of high-performance, flexible AI models that blur the lines between simplicity and sophistication. Yet, its rise also sharpens the debate about control, customization, and the democratization of AI technology. As market players and communities collide in this competitive arena, the ultimate winners will be those enterprises and developers who recognize that true AI innovation hinges not only on benchmark rankings but on the strategic choices they make today.