Large language models (LLMs) have changed how we interact with artificial intelligence and how we look up information. However, these models often give inaccurate answers, particularly to questions outside their area of expertise. Researchers at the Massachusetts Institute of Technology (MIT) have taken a step toward addressing this problem with Co-LLM, an algorithm that lets different models cooperate more effectively.

Information today is abundant but often fragmented, which makes collaboration between sources more valuable than ever. LLMs that operate in isolation are limited in how precise and reliable their answers can be. Much like a student who asks a knowledgeable friend to clarify a difficult topic, an LLM could benefit from consulting a specialized model when needed. The challenge has been deciding, efficiently, when such collaboration should happen.

Given the breadth of human knowledge, building an algorithm that recognizes when to “phone a friend” has been a persistent challenge. Rather than relying on large labeled datasets or intricate hand-crafted formulas to decide when models should collaborate, the MIT team took a more intuitive approach with Co-LLM.

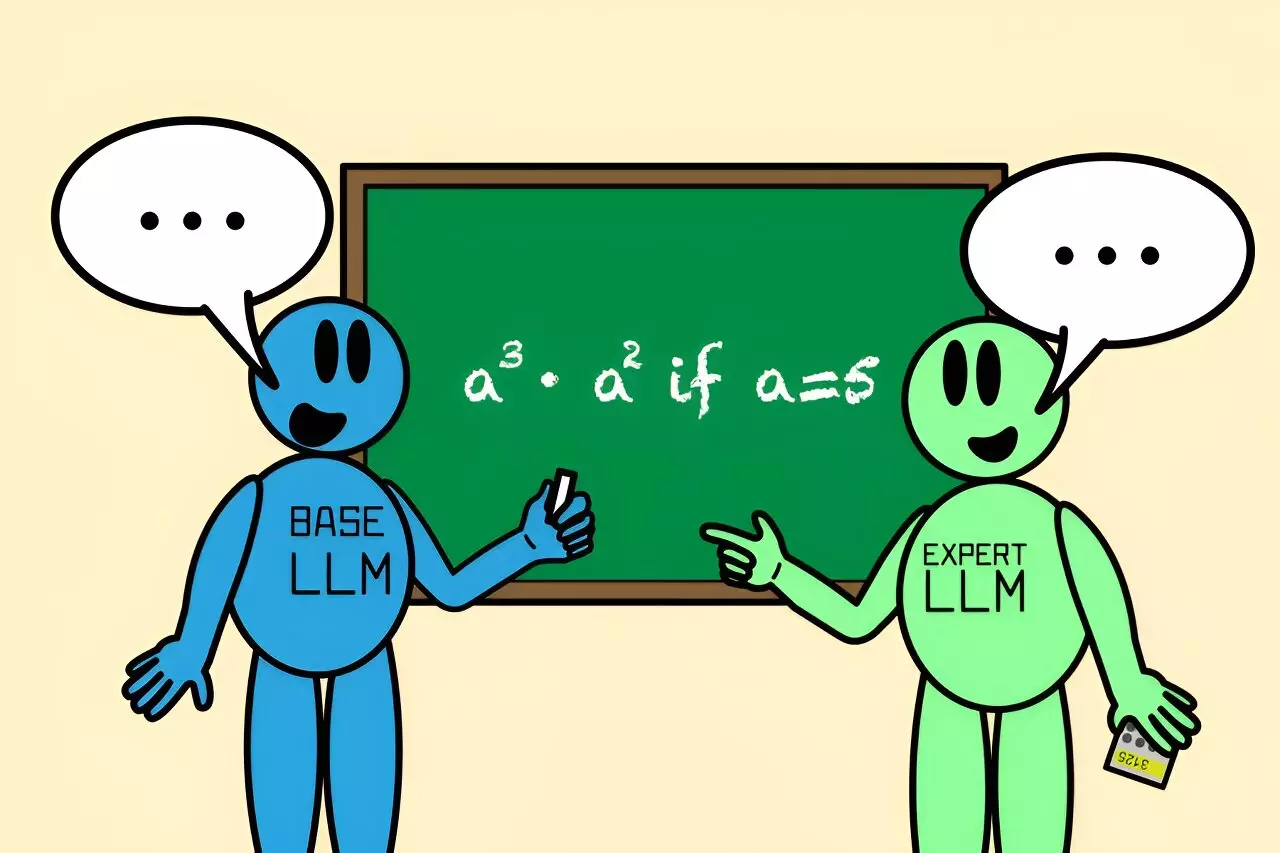

At its core, Co-LLM pairs a general-purpose LLM with an expert model tailored to a specific domain, such as medicine or mathematics. As the general-purpose model composes its response, Co-LLM examines each word (token) and identifies where the expert model could supply a more accurate one. The base model thus drafts the response while the expert contributes precise answers for the parts that call for specialized knowledge.
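The token-by-token division of labor can be pictured as a small decoding loop. The sketch below is illustrative only: the models are hypothetical stand-ins that return next-token probability tables, and the gate (`defer_prob`) is a hypothetical function of the text generated so far, not Co-LLM's actual switch. At each step, the gate decides whether the base model or the expert writes the next token.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for a language model: maps the tokens generated so
# far to a next-token probability table.
NextTokenFn = Callable[[List[str]], Dict[str, float]]


def greedy(dist: Dict[str, float]) -> str:
    """Return the most probable token in a probability table."""
    return max(dist, key=dist.get)


def co_decode(
    base: NextTokenFn,
    expert: NextTokenFn,
    defer_prob: Callable[[List[str]], float],
    max_tokens: int = 10,
    threshold: float = 0.5,
) -> List[Tuple[str, str]]:
    """Generate token by token; the expert writes the tokens the gate flags.

    Returns (token, source) pairs so the routing decisions are visible.
    """
    out: List[str] = []
    trace: List[Tuple[str, str]] = []
    for _ in range(max_tokens):
        # Per-token decision: defer to the expert when the gate is confident.
        use_expert = defer_prob(out) > threshold
        token = greedy(expert(out) if use_expert else base(out))
        if token == "<eos>":
            break
        out.append(token)
        trace.append((token, "expert" if use_expert else "base"))
    return trace


# Toy models (hypothetical): the base writes fluent text but a wrong number,
# the expert knows the number, and the gate fires only at the numeric slot.
DRAFT = ["The", "answer", "is"]


def toy_base(prefix: List[str]) -> Dict[str, float]:
    if len(prefix) < len(DRAFT):
        return {DRAFT[len(prefix)]: 0.9, "<eos>": 0.1}
    if len(prefix) == len(DRAFT):
        return {"125": 0.6, "3125": 0.4}
    return {"<eos>": 1.0}


def toy_expert(prefix: List[str]) -> Dict[str, float]:
    if len(prefix) == len(DRAFT):
        return {"3125": 0.95, "125": 0.05}
    return {"<eos>": 1.0}


def toy_gate(prefix: List[str]) -> float:
    return 0.9 if prefix and prefix[-1] == "is" else 0.1


print(co_decode(toy_base, toy_expert, toy_gate))
# → [('The', 'base'), ('answer', 'base'), ('is', 'base'), ('3125', 'expert')]
```

Replacing the gate with one that always returns 0 reproduces the base model on its own, which in this toy setup ends with 125 instead of 3125.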

This collaboration is governed by what the MIT researchers call a “switch variable.” Like a project manager, it decides, for each word of the response being generated, whether the general-purpose model should write it or call in the specialist, and it slots the specialist's contributions into the relevant parts of the draft. In essence, the process mirrors human behavior: recognizing when additional expertise is needed to fill a knowledge gap.
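One way to formalize such a switch, offered here as an assumption rather than as Co-LLM's published implementation, is as a hidden yes/no variable Z_t for each token: Z_t = 1 means the expert writes token t. A gate gives P(Z_t = 1), and the probability of the observed token sums over both possible writers. The logistic gate, its feature vector, the training loss, and all numbers below are hypothetical.

```python
import math
from typing import Iterable, Sequence, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def switch_prob(weights: Sequence[float], features: Sequence[float]) -> float:
    """P(Z_t = 1): probability that the expert writes token t.

    Hypothetical gate: a logistic function of a linear score over features
    of the base model's state at position t.
    """
    return sigmoid(sum(w * f for w, f in zip(weights, features)))


def token_likelihood(p_switch: float, p_base: float, p_expert: float) -> float:
    """Marginal probability of the observed token, summed over who wrote it."""
    return (1.0 - p_switch) * p_base + p_switch * p_expert


def expert_credit(p_switch: float, p_base: float, p_expert: float) -> float:
    """Posterior P(Z_t = 1 | observed token): the expert's share of the credit."""
    return p_switch * p_expert / token_likelihood(p_switch, p_base, p_expert)


def sequence_nll(steps: Iterable[Tuple[float, float, float]]) -> float:
    """Negative log marginal likelihood; one possible training loss for the gate."""
    return -sum(math.log(token_likelihood(*step)) for step in steps)


# A token the base model finds unlikely but the expert finds likely
# (hypothetical numbers). Zero weights give an undecided gate: P(Z_t = 1) = 0.5.
p = switch_prob([0.0, 0.0], [1.3, -0.7])
print(token_likelihood(p, 0.01, 0.9))  # marginal probability of the token
print(expert_credit(p, 0.01, 0.9))     # close to 1: credited to the expert
```

Under these assumptions, the gate needs no per-token labels saying who should write what. Tokens the expert predicts far better than the base model are credited mostly to the expert, so minimizing the loss nudges the gate toward deferring at similar positions.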

Co-LLM's flexibility shows in how readily it applies to different scenarios. For example, when asked about the ingredients of a specific prescription drug, a standard LLM may produce incorrect or incomplete information. By bringing in an expert LLM specialized in biomedical knowledge, Co-LLM helps users get more reliable answers.

The algorithm also delivers measurable gains in accuracy. In one illustrative case, a general-purpose LLM solved a math problem incorrectly, arriving at 125. With help from an expert math model, Co-LLM corrected the response to the right answer, 3,125. Examples like this illustrate Co-LLM's ability to outperform both standalone models and models that are not specifically fine-tuned for collaboration.

The implications of Co-LLM extend beyond improving existing models. The approach could change how AI systems are trained and integrated into applications such as enterprise document management: by letting Co-LLM draw on the most current data available, businesses could keep their outputs relevant and factual over time.

This collaborative approach also offers a path toward self-correction in AI models. Future versions may be able to backtrack or revise when the expert model's contribution turns out to be wrong, making the system more reliable. Such advances could make AI systems more effective across many sectors.

One feature that sets Co-LLM apart from other collaborative approaches is token-level routing. The algorithm assigns responsibility at a fine grain, drawing on specialized knowledge word by word rather than requiring every model involved to contribute at every step. Because the expert is consulted only where it is needed, this improves efficiency, and it also improves the accuracy of answers across a wide range of queries.
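The efficiency argument for token-level routing comes down to counting how often the expert runs. In this minimal sketch, each model is a hypothetical function that returns the next token for a given prefix, and the `defer` rule is likewise hypothetical. The expert is called only at positions where the switch fires.

```python
from typing import Callable, List, Tuple

StepFn = Callable[[List[str]], str]


def route_tokens(
    n_tokens: int,
    base_step: StepFn,
    expert_step: StepFn,
    defer: Callable[[List[str]], bool],
) -> Tuple[List[str], int]:
    """Token-level routing: consult the expert only where `defer` fires.

    Returns the merged response and the number of expert calls made.
    """
    tokens: List[str] = []
    expert_calls = 0
    for _ in range(n_tokens):
        if defer(tokens):
            tokens.append(expert_step(tokens))
            expert_calls += 1
        else:
            tokens.append(base_step(tokens))
    return tokens, expert_calls


# Hypothetical per-position outputs of each model for one short answer.
BASE_OUT = ["The", "result", "is", "125", "."]
EXPERT_OUT = ["The", "result", "is", "3125", "."]

tokens, calls = route_tokens(
    len(BASE_OUT),
    base_step=lambda prefix: BASE_OUT[len(prefix)],
    expert_step=lambda prefix: EXPERT_OUT[len(prefix)],
    defer=lambda prefix: len(prefix) == 3,  # switch fires only at the number
)
print(" ".join(tokens), calls)  # → The result is 3125 . 1
```

A scheme that queried every model at every position would call the expert five times for this five-token answer. Here it is called once. The sketch counts only expert calls; in a Co-LLM-style system the base model presumably still runs at each step, because the switch decision depends on it.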

As our understanding of collaboration between AI systems grows, Co-LLM represents a substantial step forward. By combining general knowledge with specialized information organically, it demonstrates a new approach to communication among multiple models. The groundwork laid by MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) may pave the way for future systems that draw on AI's broad capabilities while remaining accurate and efficient.