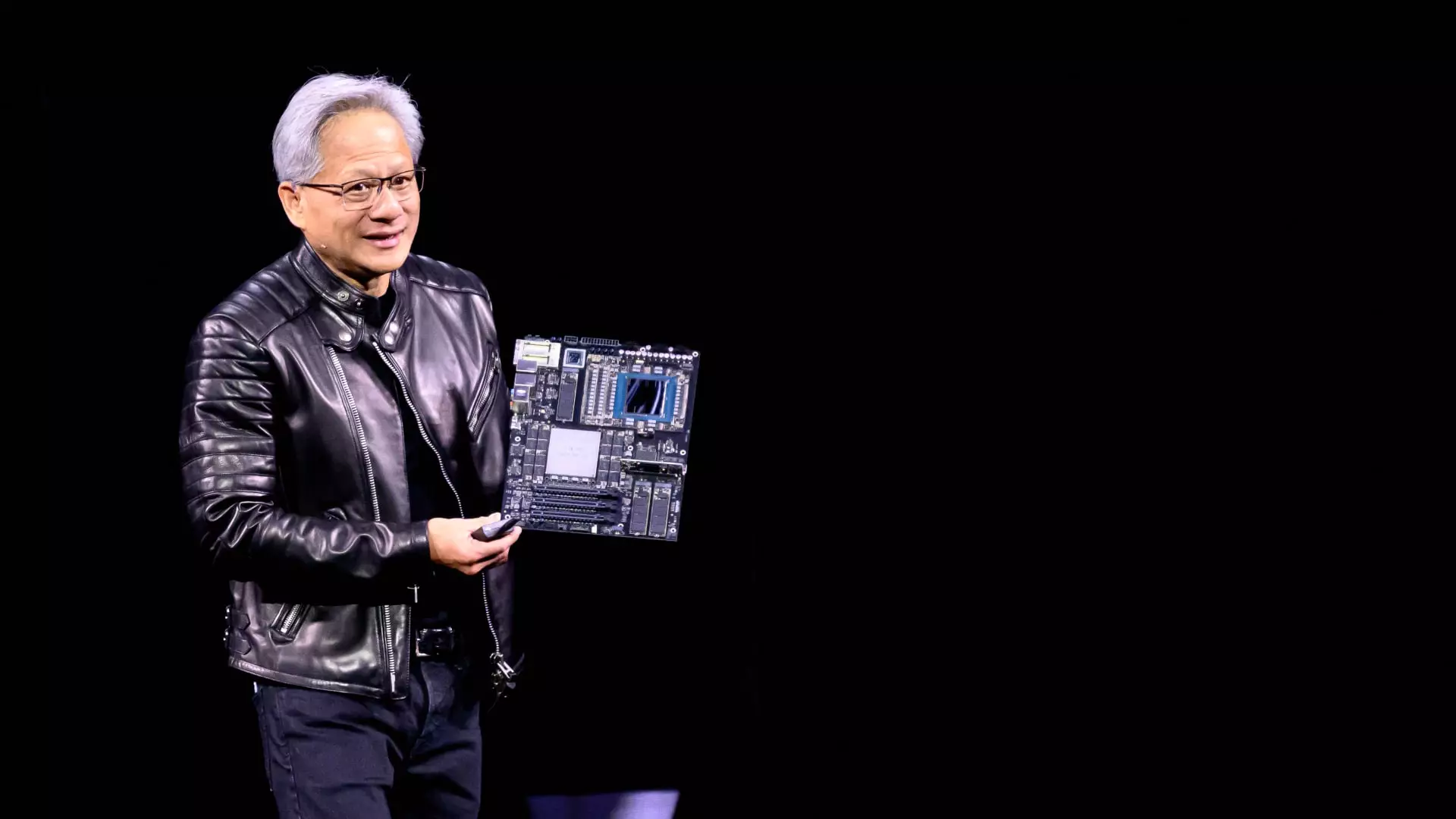

In the technology industry, few companies carry as much weight as Nvidia. In his keynote at the company’s GTC conference, CEO Jensen Huang laid out a vision centered on what may well define the next decade: speed. With artificial intelligence (AI) evolving rapidly, Huang’s central message was that customers should buy the fastest chips available. That message lands in an industry caught between soaring demand for AI computing and limited resources to meet it. The implication is clear: fast chips are not just an advantage; they are essential to staying competitive.

Huang’s presentation went beyond optimistic proclamations and into the raw economics of technology investment. He argued that faster chips would ease concerns about cost and return on investment, in part because a single chip can be “digitally sliced” to serve AI applications to vast numbers of users simultaneously. The core of his argument was simple: in a fast-moving market, higher performance translates directly into lower cost. This is not merely a visionary claim; it is a calculated pitch aimed at the priorities of the cloud and AI companies at the forefront of the industry.

Catalysts for Change: The Blackwell Ultra Systems

Offering a glimpse into the future, Nvidia introduced its Blackwell Ultra systems, which promise a major step forward in data processing. Huang claimed these systems could generate up to 50 times more revenue than their predecessors because they are so much faster. For financial analysts and tech investors alike, the claim carries real weight, since it implies substantial returns on investment.

During the keynote, Huang walked through a detailed breakdown of cost per token, where a token is the basic unit of text an AI model generates. That breakdown helps demystify complex financial metrics for stakeholders in the hyperscale cloud sector. Such transparency shows an awareness of market concerns and reinforces Nvidia’s position as the benchmark amid growing competition. With each Blackwell GPU reportedly costing around $40,000, and three million units already sold, Nvidia is strengthening its foothold in AI at an unprecedented pace.
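To make the cost-per-token logic concrete, here is a minimal Python sketch. It assumes serving cost can be approximated as a system’s hourly cost divided by its token throughput; the function name `cost_per_million_tokens` and every number below are hypothetical placeholders, not figures from Huang’s keynote.

```python
# Hypothetical illustration of cost-per-token math.
# All prices and throughputs are made-up placeholders, not Nvidia figures.

def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_second: float) -> float:
    """Return the serving cost in USD per one million generated tokens."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost_usd / tokens_per_hour * 1_000_000


# Assumed comparison: the faster system costs twice as much per hour
# but produces ten times as many tokens per second.
slow = cost_per_million_tokens(hourly_cost_usd=2.0, tokens_per_second=1_000)
fast = cost_per_million_tokens(hourly_cost_usd=4.0, tokens_per_second=10_000)
print(f"slow: ${slow:.3f}/M tokens, fast: ${fast:.3f}/M tokens")
# slow: $0.556/M tokens, fast: $0.111/M tokens
```

In this illustrative setup, the pricier but faster system ends up five times cheaper per million tokens, which is the shape of Huang’s argument that speed and cost efficiency go hand in hand.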

The Uncertain Terrain of Custom Chips

As Huang addressed the shifting landscape of AI infrastructure spending, he remained skeptical of the growing trend of custom chips developed by major cloud providers. These chips, often called ASICs (application-specific integrated circuits), could emerge as competitors to Nvidia’s GPUs and reshape the industry’s competitive dynamics. Huang, however, was quick to dismiss their viability, citing concerns about flexibility and adaptability. He offered a blunt reality check: many custom chip projects never reach the market, and those that do are not guaranteed to succeed.

This open skepticism underscores a critical point: designing chips that outperform existing options is a monumental challenge. Huang’s remark that “the ASIC still has to be better than the best” is particularly pointed. His focus remains on persuading enterprises to build their large-scale projects on Nvidia’s state-of-the-art systems. The narrative he crafted is one of dominance, innovation, and a steady commitment to pushing the boundaries of what is technologically achievable.

The Road Ahead: Strategic Long-Term Vision

Nvidia’s release of a roadmap extending to 2028 is a strategic move meant to build confidence among its cloud partners, many of whom are poised to invest billions in AI infrastructure. Huang stressed that these firms must choose their technology partners with urgency, noting that large budgets have already been approved and that this money cannot sit idle on the sidelines. These customers want clarity to guide their enormous investments, and Nvidia is positioning itself as their key partner.

Huang’s forward-looking view makes clear that the road ahead holds both opportunity and challenge. As more sectors come to rely on AI, the next wave of innovation will center on the combination of speed and cost efficiency. In this climate, Nvidia aims not merely to adapt but to lead. The fervor in Huang’s voice carried a critical message: the race for computational speed is just beginning, and Nvidia intends to set the pace.