Nvidia has open-sourced key components of its Run:ai platform, most notably the KAI Scheduler. This Kubernetes-native GPU scheduling solution is now distributed under the Apache 2.0 license, which allows enterprises and developers to use, modify, and redistribute it. By making KAI Scheduler available to the broader community, Nvidia reinforces its commitment to open source and invites contributions and feedback from AI practitioners worldwide, signaling a more collaborative approach to AI infrastructure development.

Understanding the Challenges of AI Workloads

As machine learning (ML) and artificial intelligence (AI) evolve, so does the complexity of managing compute-intensive workloads. Conventional resource schedulers often fall short because AI tasks have fluctuating GPU and CPU usage patterns. For instance, a single GPU might suffice for an initial data exploration session, while multiple GPUs may suddenly be needed for full-scale model training. KAI Scheduler aims to close this gap by recalibrating resource allocation in real time.

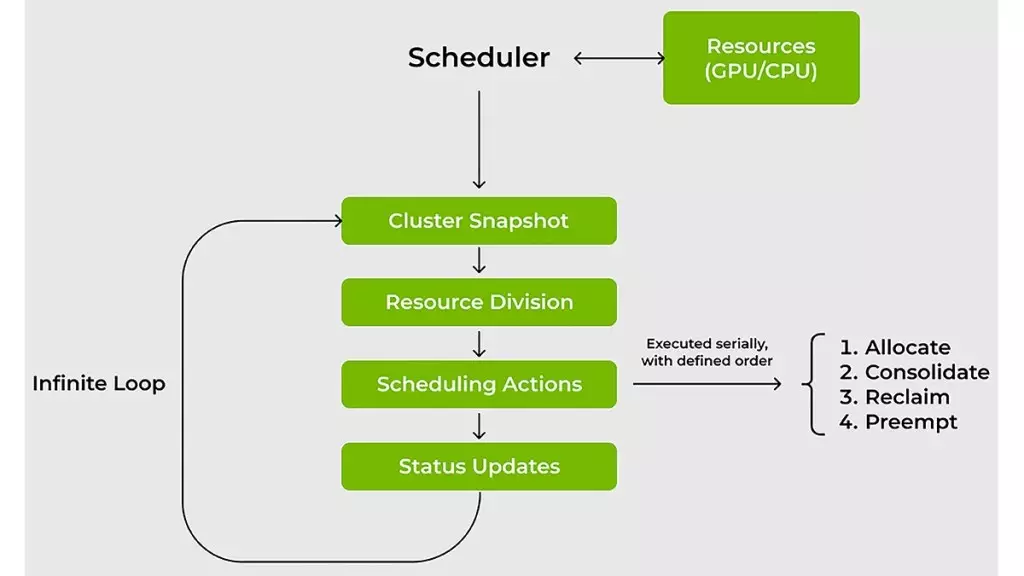

The Dynamic Nature of KAI Scheduler

What sets KAI Scheduler apart is its dynamic recalibration mechanism. Unlike conventional schedulers, it continuously reassesses and adjusts fair-share values based on current workload demand. This reduces wait times for compute access, a common drag on productivity for ML and IT teams. The scheduler also relies on gang scheduling (all pods of a job start together or not at all) and hierarchical queuing (queues nested so that capacity can be divided among teams and projects) to make job submission more efficient. Because these adjustments are automated, engineers spend less time managing resources and more time on experimentation.
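The idea of recalculating fair-share values as demand shifts can be illustrated with a small sketch. This is not KAI Scheduler's actual algorithm; the `Queue` fields (`quota`, `weight`, `demand`) and the `fair_shares` function are hypothetical names chosen for illustration, and the sketch assumes positive weights and guarantees that fit within the cluster.

```python
from dataclasses import dataclass


@dataclass
class Queue:
    name: str
    quota: int     # guaranteed GPUs (hypothetical field)
    weight: float  # share of surplus GPUs, must be > 0 (hypothetical field)
    demand: int    # GPUs currently requested


def fair_shares(queues, total_gpus):
    """Return {queue name: GPUs} for the current demand snapshot."""
    # Step 1: each queue receives its guarantee, capped by what it asks for.
    share = {q.name: float(min(q.quota, q.demand)) for q in queues}
    remaining = total_gpus - sum(share.values())

    # Step 2: split the surplus by weight among queues that still want more.
    hungry = [q for q in queues if q.demand > share[q.name]]
    while remaining > 1e-9 and hungry:
        total_weight = sum(q.weight for q in hungry)
        granted = 0.0
        for q in hungry:
            offer = remaining * q.weight / total_weight
            take = min(offer, q.demand - share[q.name])
            share[q.name] += take
            granted += take
        remaining -= granted
        # Queues whose demand is now met drop out of the next round.
        hungry = [q for q in hungry if q.demand - share[q.name] > 1e-9]
    return share


if __name__ == "__main__":
    queues = [Queue("research", 4, 1.0, 2), Queue("training", 4, 1.0, 10)]
    print(fair_shares(queues, total_gpus=8))
    queues[0].demand = 6  # research ramps up, so shares are recomputed
    print(fair_shares(queues, total_gpus=8))
```

In the first snapshot the research queue only wants 2 GPUs, so the training queue receives 6. Once research demand rises to 6, rerunning the function returns 4 GPUs to each queue, which is the kind of recalculation the paragraph above describes.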

Innovative Resource Management Strategies

Nvidia’s KAI Scheduler employs two strategies for optimizing resource utilization: bin-packing and workload spreading. Bin-packing combats resource fragmentation by consolidating smaller tasks onto GPUs and CPUs that would otherwise sit partially idle, so computational power is not wasted. Workload spreading instead distributes tasks across multiple nodes, balancing load across the cluster. Efficient placement also reduces the pressure on teams to hoard resources, a common practice that undermines collaboration and wastes capacity in shared clusters.
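The difference between the two placement strategies can be shown with a toy node picker. This is a minimal sketch, not KAI Scheduler code: `pick_node`, the strategy names, and the node names are assumptions made for illustration, and real placement considers far more than free GPU counts.

```python
def pick_node(free_gpus, request, strategy):
    """Choose a node for a job that needs `request` GPUs.

    free_gpus: {node name: number of free GPUs}
    strategy:  "binpack" or "spread" (illustrative labels)
    Returns the chosen node name, or None if no node can fit the job.
    """
    candidates = {n: f for n, f in free_gpus.items() if f >= request}
    if not candidates:
        return None
    if strategy == "binpack":
        # Tightest fit: fill the fullest node first to limit fragmentation.
        return min(candidates, key=lambda n: (candidates[n], n))
    if strategy == "spread":
        # Loosest fit: pick the emptiest node to balance load.
        return min(candidates, key=lambda n: (-candidates[n], n))
    raise ValueError(f"unknown strategy: {strategy}")


if __name__ == "__main__":
    cluster = {"node-a": 1, "node-b": 4, "node-c": 8}
    print(pick_node(cluster, 1, "binpack"))  # node-a
    print(pick_node(cluster, 1, "spread"))   # node-c
```

With bin-packing, the 1-GPU job lands on the nearly full `node-a`, leaving `node-c` intact for a large job. With spreading, the same job goes to `node-c`, the least loaded node.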

Maximizing Cluster Efficiency with Resource Guarantees

One notable feature of KAI Scheduler is its enforcement of resource guarantees. This addresses a perennial concern in AI resource management: ensuring that the GPUs allocated to a team are available when that team needs them. At the same time, the scheduler dynamically reallocates idle resources to other workloads, which prevents resource hogging and improves overall cluster efficiency. In today’s highly competitive AI landscape, reliable access to computational resources can strongly influence project timelines and outcomes.
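One way to picture guarantees coexisting with reallocation of idle GPUs is a reclaim plan: queues may borrow idle capacity beyond their guarantee, and that borrowed capacity is handed back when the owning queue asks for it. The sketch below is an assumption about how such logic could look, not KAI Scheduler's implementation; `plan_reclaim` and the queue names are hypothetical.

```python
def plan_reclaim(quota, allocated, total_gpus, requester, request):
    """Return {queue: GPUs to reclaim} so `requester` can start `request` GPUs.

    quota:     {queue: guaranteed GPUs}
    allocated: {queue: GPUs currently in use}
    Assumes the guarantees sum to at most `total_gpus`.
    """
    if allocated[requester] + request > quota[requester]:
        raise ValueError("request exceeds the requester's guarantee")

    free = total_gpus - sum(allocated.values())
    shortfall = max(0, request - free)

    # Queues running above their own guarantee are borrowing idle GPUs.
    borrowers = sorted(
        (q for q in allocated if q != requester and allocated[q] > quota[q]),
        key=lambda q: allocated[q] - quota[q],
        reverse=True,
    )
    plan = {}
    for q in borrowers:
        if shortfall == 0:
            break
        take = min(shortfall, allocated[q] - quota[q])  # never cut into a guarantee
        plan[q] = take
        shortfall -= take
    if shortfall > 0:
        raise RuntimeError("guarantees are oversubscribed")
    return plan


if __name__ == "__main__":
    quota = {"team-a": 4, "team-b": 4}
    allocated = {"team-a": 0, "team-b": 8}  # team-b borrowed team-a's idle GPUs
    print(plan_reclaim(quota, allocated, 8, "team-a", 3))  # {'team-b': 3}
```

The design choice worth noting is that only the borrowed portion is ever reclaimed. A queue's usage within its own guarantee is never touched, which is what makes the guarantee meaningful.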

Simplifying the Integration of AI Frameworks

Integrating diverse AI frameworks with a scheduler has traditionally been complex. Researchers and engineers often have to work through extensive manual configuration to get tools like Kubeflow, Ray, and Argo to interact smoothly with the scheduling process. KAI Scheduler mitigates this with a built-in podgrouper that streamlines these integrations. This accelerates development and lowers the barrier to entry for teams that want to use a variety of AI technologies without heavy configuration overhead.
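The article does not describe how the podgrouper works internally. One plausible reading, offered here purely as an assumption, is that it groups pods by the workload that owns them so that each group can be scheduled as a unit. The sketch below uses plain dictionaries instead of the Kubernetes API, and `group_by_owner`, `can_admit`, and all field names are hypothetical.

```python
from collections import defaultdict


def group_by_owner(pods):
    """Group pods by the workload that owns them.

    Each pod is a dict with hypothetical keys:
    "name", "owner_kind", "owner_name", "gpus".
    """
    groups = defaultdict(list)
    for pod in pods:
        groups[(pod["owner_kind"], pod["owner_name"])].append(pod)
    return dict(groups)


def can_admit(group, free_gpus):
    """All-or-nothing check: admit the group only if every pod fits.

    Simplification: compares against cluster-wide free GPUs and
    ignores per-node placement.
    """
    return sum(pod["gpus"] for pod in group) <= free_gpus


if __name__ == "__main__":
    pods = [
        {"name": "trainer-0", "owner_kind": "TrainingJob", "owner_name": "bert", "gpus": 4},
        {"name": "trainer-1", "owner_kind": "TrainingJob", "owner_name": "bert", "gpus": 4},
        {"name": "notebook", "owner_kind": "Notebook", "owner_name": "eda", "gpus": 1},
    ]
    for owner, members in group_by_owner(pods).items():
        print(owner, [p["name"] for p in members], can_admit(members, free_gpus=6))
```

Under this reading, the two trainer pods form one group that needs 8 GPUs in total, so with 6 free GPUs neither pod starts, while the single-pod notebook group is admitted. Deriving the group from the owning workload is what would spare users from declaring it by hand for each framework.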

Nvidia’s KAI Scheduler addresses pressing challenges in AI workload management while embracing collaboration and community-driven development. Through its scheduling features and open-source license, Nvidia aims to make AI infrastructure more accessible, efficient, and effective for the teams that depend on it.