As we advance through the age of artificial intelligence, the rapid rise of large language models (LLMs) has captured the spotlight. These models, with hundreds of billions of parameters, represent a leap in sophistication, recognizing subtler patterns and achieving higher accuracy in tasks ranging from language translation to content generation. However, this power comes at a significant ecological and financial cost. The staggering computational resources required to train and run these models raise the question: is bigger truly better? As a counterpoint, small language models (SLMs) are emerging as viable contenders, offering practical alternatives that challenge the assumption that AI progress depends on sheer size.

The Cost of Overengineering

The allure of LLMs lies in their scale, and scale is expensive. Google’s Gemini 1.0 Ultra model, for instance, reportedly cost its creators $191 million to train. The costs extend beyond initial training; the energy consumed in day-to-day operation is equally staggering. By one estimate, a single query to a popular platform like ChatGPT uses around ten times the energy of a straightforward Google search. This raises an urgent question about the sustainability of AI and makes a compelling case for reassessing how we build these systems. With the technology sector under pressure to reduce its carbon footprint, the time is ripe for smaller, more efficient models that preserve performance on targeted tasks while curbing resource requirements.

The Small Model Revolution

In response to these costs, tech giants like IBM and Microsoft are pivoting towards SLMs, which use only a few billion parameters. Despite their seemingly limited capacity, these models are finding success in narrow, well-defined applications such as healthcare chatbots or data aggregation for smart devices. Zico Kolter from Carnegie Mellon University presents a thought-provoking perspective: “For a lot of tasks, an 8 billion-parameter model is actually pretty good.” This assertion challenges long-held beliefs about the necessity of sprawling models, suggesting that efficiency can coexist with effectiveness.

Without the Noise: Quality Data Over Quantity

One of the defining characteristics of these smaller models is their training methodology. Large models are typically trained on vast amounts of messy, unfiltered data scraped from the internet, which complicates the learning process. In contrast, knowledge distillation lets an SLM inherit the strengths of a larger model: the large model's outputs serve as a clean, carefully curated training signal for the small one. The process resembles a seasoned expert teaching a novice, an efficient transfer of understanding that allows SLMs to perform remarkably well with far less training data. As Kolter notes, “the reason these SLMs get so good…is that they use high-quality data.”
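The teacher-to-student transfer can be made concrete with a small sketch. This is not the specific recipe used by any of the models mentioned here; it assumes one common formulation of knowledge distillation, in which the student is trained to match the teacher's temperature-softened output distribution. The function names, the example logits, and the temperature value are all illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    Assumption: this is the classic soft-target loss, shown for illustration.
    A real training loop would average it over a batch and usually combine it
    with an ordinary loss on ground-truth labels.
    """
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return sum(p * math.log(p / q) for p, q in zip(teacher, student) if p > 0)


# Hypothetical logits over three candidate next tokens.
teacher_logits = [2.0, 1.0, 0.1]
matching_student = [2.0, 1.0, 0.1]
untrained_student = [0.0, 0.0, 0.0]

loss_match = distillation_loss(teacher_logits, matching_student)
loss_untrained = distillation_loss(teacher_logits, untrained_student)
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, so minimizing it pulls the small model towards the large model's behavior without requiring the original raw training data.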

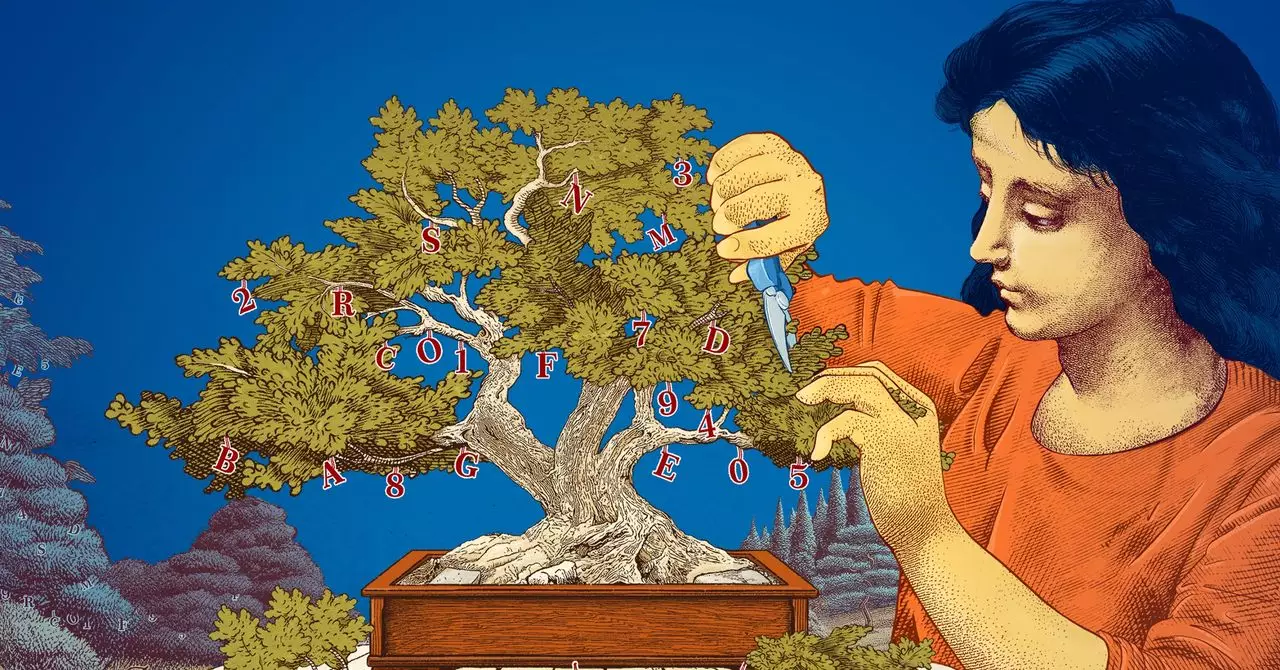

Trimming the Fat: The Art of Optimization

Beyond data quality, SLMs are often optimized with techniques such as pruning, which removes unnecessary or inefficient parts of a neural network. Inspired by the way the human brain gains efficiency by shedding connections over time, pruning excises connections deemed superfluous. The method traces back to Yann LeCun’s pioneering concept of “optimal brain damage,” which suggested that a significant portion of a trained network's connections can be removed without compromising functionality. Pruning lets a model be tailored to specialized contexts while lowering the resources required for both training and real-time use.
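To illustrate the idea, here is a minimal sketch of magnitude pruning, which zeroes out the weights with the smallest absolute values. Note the substitution: optimal brain damage ranks connections using second-derivative information about the loss, whereas this sketch uses the simpler magnitude heuristic as a stand-in. The function name, the weight matrix, and the sparsity level are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude entries set to zero.

    `weights` is a list of rows (a plain 2-D list) and `sparsity` is the
    fraction of entries to remove, between 0 and 1. Entries tied with the
    cutoff value are also removed, so slightly more than the requested
    fraction may be pruned.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")

    magnitudes = sorted(abs(w) for row in weights for w in row)
    n_to_prune = int(len(magnitudes) * sparsity)
    if n_to_prune == 0:
        return [row[:] for row in weights]

    # Largest magnitude that still falls inside the pruned fraction.
    threshold = magnitudes[n_to_prune - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


# Hypothetical 2x3 weight matrix; prune the weakest half of its connections.
layer = [[0.9, -0.05, 0.3],
         [0.01, -0.7, 0.2]]
pruned = magnitude_prune(layer, sparsity=0.5)
```

In practice, pruning is usually followed by a short round of fine-tuning so the remaining weights can compensate for the connections that were removed.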

Empowering Innovation Through Modularity

The drive towards smaller language models also presents a valuable opportunity for researchers. Because a failed experiment on a small model costs far less compute, researchers can test novel ideas without fear of wasting extensive resources. As Leshem Choshen from the MIT-IBM Watson AI Lab puts it, “small models allow researchers to experiment with lower stakes.” This fosters a nimble research environment that could pave the way for significant advances in AI.

A Balanced Future for AI

While LLMs will undoubtedly remain relevant for broad, general-purpose applications, the emergence of SLMs opens up new ground. These streamlined models democratize access to AI technology, allowing engineers and developers to build advanced functionality into everyday devices, and they are also kinder to our planet. By saving time, compute, and money, SLMs hold the potential to shape a future of AI that is both powerful and sustainable.

In a world increasingly conscious of its environmental footprint, the message is clear: sometimes, less truly is more. The pursuit of innovation should not rest solely on grandiose visions; practicality and intentionality can lead to equally revolutionary outcomes in artificial intelligence.